Refactoring monoliths to become cloud ready

Taking your software to the cloud has a number of benefits that most organizations would love to take advantage of. These can include optimized IT costs, horizontal scalability, improved security through regular updates and security patches, and health metrics and observability for your applications. Yet this utopia within our grasp remains elusive to many companies… But why? Forbes claims (and I happen to agree) that one of the biggest challenges facing organizations that are trying to take their software to the cloud is refactoring.

Building monolithic applications was the dominant programming paradigm in the not so distant past, and, as with all fads, there are unfortunate artifacts and reminders of the choices we made long ago. Now you, tasked with new business objectives, must take your successful monolith and make it cloud ready. What is the shortest path there?

Getting cloud ready

Feasibility

There are several important terms here: serverless, containers, orchestration, service discovery, stateful vs stateless, wait what?

Let’s start with containers. Containers package your application, libraries, binary dependencies, and any other runtime requirements into a well-defined format. You could almost think of containers as turning your entire application and its dependencies into a single executable unit. The containers themselves are portable and can be run locally for developers, on a cloud provider’s infrastructure, or within your own data centers.

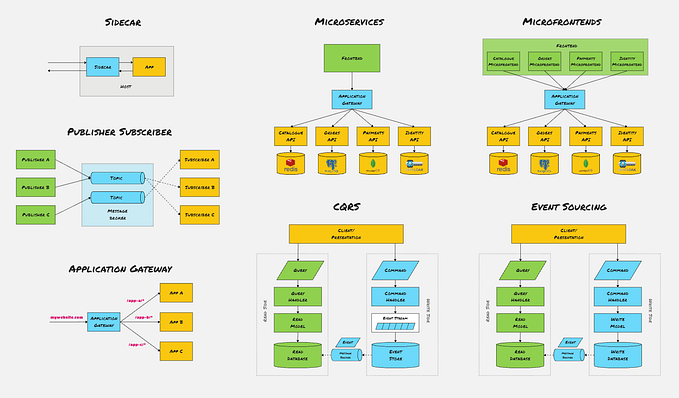

Splitting a monolith into microservice containers requires varying degrees of effort and always requires thoughtful care, but this approach can simplify ongoing development efforts. There are no hard guidelines about how to split your monolith apart. It largely depends on how modular your code base already is. Engineers on your team who are familiar with these logical separations might see natural fault lines that would require minimal effort to move components into their own services.

Once you have containers, you need a way to coordinate and run them: orchestration. Systems such as Kubernetes let you specify configuration such as how many instances (copies) of a service you would like to run. The orchestration tool then assigns those containers to resources (like VMs) and ensures the requested number of instances are running. It can also start new instances if one fails.
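To make the "requested number of instances" idea concrete, here is a minimal sketch of a desired-state reconcile step. It is only an illustration of the concept, not how Kubernetes is actually implemented, and the names `Instance`, `reconcile`, and `_ids` are hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Instance:
    instance_id: int
    healthy: bool = True


# Hypothetical ID source for newly started instances.
_ids = count(1)


def reconcile(running: list[Instance], desired: int) -> list[Instance]:
    """Return the set of instances an orchestrator would converge to."""
    # Drop failed instances; a real orchestrator would also clean them up.
    alive = [inst for inst in running if inst.healthy]
    # Start replacements until the requested count is met.
    while len(alive) < desired:
        alive.append(Instance(next(_ids)))
    # Scale down if more instances are running than requested.
    return alive[:desired]
```

Calling `reconcile` with one healthy and one failed instance and `desired=3` keeps the healthy instance and adds two new ones. A real orchestrator runs a loop like this continuously against the actual state of the cluster.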

By splitting your monolith into containers, your orchestrator can scale your services independently, restart them independently, and deploy them independently. This can be beneficial for systems that see heavy traffic in specific areas. It may also help decouple dev teams’ work because they can iterate and deploy independently. Once split apart, you can leverage a variety of scaling strategies to optimize your resource utilization (and hence costs) and your development practices.

Orchestration systems are often complex and require knowledge to configure correctly. That is where serverless can help. Serverless systems provide a simpler configuration and deployment strategy, and they don’t require you to run a complex orchestration system like Kubernetes. Some serverless systems, such as GCP’s Cloud Run, allow you to easily distribute requests between different versions of your service. Traffic splitting allows you to prove out new changes using canary deployments, A/B release strategies, and gradual rollouts. Depending on your traffic patterns, serverless systems may offer the lowest cost, since billing is typically proportional to invocation time.
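Traffic splitting is normally configured on the platform rather than written by hand, but the underlying idea is simple weighted routing. The sketch below only illustrates that idea; the revision names, the 90/10 split, and `pick_revision` are assumptions for the example and not part of any provider's API.

```python
import random


def pick_revision(weights: dict[str, int], rng: random.Random) -> str:
    """Pick a revision name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical split: 90% of requests to the stable revision, 10% to the canary.
split = {"stable": 90, "canary": 10}

# A seeded generator keeps the simulation repeatable.
rng = random.Random(42)
sample = [pick_revision(split, rng) for _ in range(10_000)]
canary_share = sample.count("canary") / len(sample)
```

Over many requests, `canary_share` lands close to the configured 10%. A gradual rollout amounts to raising the canary weight step by step while watching your metrics.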

Service discovery is an essential but easily overlooked aspect of your refactor, especially with auto-scaling capabilities. Service discovery refers to how your microservices will find and communicate with other microservices. There are a variety of approaches. The choice of runtime platform will impact this decision heavily, but it might be as simple as load balancers and DNS. Sometimes the orchestration system (such as Kubernetes) has well-established solutions.
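For the "load balancers and DNS" case, discovery on the caller's side can be as small as the sketch below. It assumes the platform gives each service a stable DNS name (as Kubernetes Services do); the `<NAME>_URL` environment variable convention, the default port, and the `billing-api` service name are hypothetical.

```python
import os


def service_url(name: str, default_port: int = 8080) -> str:
    """Build the base URL for a peer service.

    Checks a hypothetical <NAME>_URL environment variable first, then
    falls back to a DNS name that matches the service name.
    """
    env_key = f"{name.upper().replace('-', '_')}_URL"
    override = os.environ.get(env_key)
    if override:
        return override.rstrip("/")
    return f"http://{name}:{default_port}"
```

With no override set, `service_url("billing-api")` returns `http://billing-api:8080`, and the platform's DNS and load balancing decide which instance answers. The environment variable gives you an escape hatch for local development.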

The reality

Sometimes just deciding on your best option can be daunting. What we have found from working in this space for so long is that people are frequently thwarted by the complexity of getting there and instead opt for the lift-and-shift approach. Moving your services to VMs is the stopgap solution to becoming cloud ready. It requires the least amount of work because you keep access to a local file system and have all of your application’s dependencies installed and configured exactly as you need them. By moving to VMs hosted in the cloud, you begin to get some of the benefits, with more generous vertical scaling and even horizontal scaling.

This might be enough to call it good. For a complex monolith, it might also be the only delivery timeline the business will agree to. If you are not a company led by engineers, your solution will likely lie somewhere at the intersection of your engineering team’s ambitions and what the business is open to. If you land in the camp that sees refactoring your monolith as an insurmountable mountain, don’t lose heart.

We see many teams discount migrating to VMs first, but it should not be ignored. Migrating and then refactoring into containers running on VMs can be a great approach. This provides the immediate scaling benefits noted above, and allows you to start moving the components that will benefit most into containers.

Doing the heavy lift (😮‍💨)

Many of us have been involved with refactoring code bases in the past. There can be a variety of reasons that prompted the refactor. Sometimes these are lightweight efforts to remove comments, encapsulate similar-ish logic with abstractions, address tech debt, or reduce cognitive complexity. More than a few times I have found myself looking at a giant backlog of bugs and, after recognizing that they all traced back to a few related data structures, decided that it would be much simpler to tackle them all at once. I have taken on this task on both product and infrastructure teams. Earlier in my career, naivety led me to believe that future coders would write songs about me or that upon completion a bronze bust would be erected in the employee lunch room. But the reality has been that this work largely goes unnoticed by the business, with slightly more fanfare from fellow engineers. This is all fine and good, but what does this look like for making our application cloud ready? When refactoring to become cloud ready there are a few large pieces to tackle.

State

Software components that are built for the cloud generally avoid holding state in memory, unless that is their explicit function. This can be counterintuitive and a departure from how you think about building software, especially if you come from an OOP background. But it is generally good practice to avoid holding state whenever possible. In fact, many of the most nefarious bugs that I’ve uncovered have been caused by poor, incorrect, or totally unnecessary state management. Here a more functional programming approach can be beneficial, where you think directly in terms of inputs mapping to outputs and chain related operations together.
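As a small sketch of what "inputs mapping to outputs" can look like, the handler below keeps no state of its own between requests. The shopping-cart domain and the names `CartStore`, `InMemoryStore`, `add_item`, and `handle_add_item` are all hypothetical; in production the store would be backed by an external database or cache rather than a dictionary.

```python
from typing import Protocol


class CartStore(Protocol):
    """Anything that can load and save a user's cart outside the service."""

    def load(self, user_id: str) -> list[str]: ...
    def save(self, user_id: str, items: list[str]) -> None: ...


class InMemoryStore:
    """Stand-in for an external store; suitable only for local runs and tests."""

    def __init__(self) -> None:
        self._data: dict[str, list[str]] = {}

    def load(self, user_id: str) -> list[str]:
        return list(self._data.get(user_id, []))

    def save(self, user_id: str, items: list[str]) -> None:
        self._data[user_id] = list(items)


def add_item(items: list[str], item: str) -> list[str]:
    """Pure function: returns a new list and leaves the input untouched."""
    return items + [item]


def handle_add_item(store: CartStore, user_id: str, item: str) -> list[str]:
    """Stateless handler: everything it needs arrives as arguments."""
    updated = add_item(store.load(user_id), item)
    store.save(user_id, updated)
    return updated
```

Because the handler holds nothing between calls, any instance can serve any request, which is what lets an orchestrator add, remove, or restart instances freely.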

Messaging

Within a monolith, you can directly call functions in other components of the system. Once split into services, those calls will become RPCs or some type of message. The decision here comes down to how tight the feedback loop needs to be, scaling, and fault tolerance. With RPCs it is often easier to reason about the flow, handle errors, and know when something is “done”… but adding many RPCs often hurts performance.
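The difference between the two styles can be sketched in a few lines. Everything here runs in one process for illustration: `charge_card` stands in for logic that would live in another service, and the standard library `queue.Queue` stands in for a real message broker. All names are hypothetical.

```python
import queue


def charge_card(order_id: str) -> str:
    """Hypothetical billing logic that used to be an in-process call."""
    return f"charged:{order_id}"


def place_order_rpc(order_id: str) -> str:
    """RPC style: the caller blocks and gets the result (or error) directly."""
    return charge_card(order_id)


# Stand-in for a message broker topic or queue.
billing_queue: "queue.Queue[str]" = queue.Queue()


def place_order_async(order_id: str) -> None:
    """Message style: the caller enqueues the work and returns immediately."""
    billing_queue.put(order_id)


def drain_billing_queue() -> list[str]:
    """Consumer side: process everything currently queued."""
    results = []
    while True:
        try:
            order_id = billing_queue.get_nowait()
        except queue.Empty:
            return results
        results.append(charge_card(order_id))
```

The RPC version tells the caller right away whether the charge succeeded. The message version returns before any work has happened, which buys you buffering and independent scaling of the consumer at the cost of a looser feedback loop.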

Many monoliths already use some kind of messaging system or queues to communicate between components of the application. If yours does, those existing channels might hint at where you can cleanly split the monolith apart, with some refactoring to move them onto whatever your cloud provider supplies: Google Pub/Sub, Azure Service Bus, or one of the AWS equivalents. While you can keep using the existing solution, the cloud native options are designed to play nicely with scalable consumers and producers. They’ll also reduce the maintenance burden (and likely the cost).

Data

Everyone loves their data, and most organizations hope to harvest more insights into their customers’ usage. This is generally a more modern practice, and most organizations will have dedicated teams and newer infrastructure to support it. However, our mission-critical monolith can tolerate exactly zero downtime, and its data is probably still kept in some kind of relational database.

Performing CRUD operations against your data often appears like it might be the biggest effort in refactoring a monolith, but this isn’t usually the case. If your monolith is built using some kind of ORM framework with a repository wrapper or facade, that may greatly reduce the complexity and effort. As long as code isn’t hitting the database directly, for example through SQL statements littered across files all over the place, that layer makes it easier to implement clean API boundaries. If you don’t have a standard way of accessing data within your application, establishing one should probably come first. Inconsistencies around data access will introduce bugs and make life for your devs a living hell. Luckily this is something that can most likely be handled in isolation and without impacting other ongoing work. This pre-work is effectively putting an API around data access, which is helpful for modularity and critical for service-oriented architectures.
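A minimal sketch of such a data-access facade, using the standard library `sqlite3` module as a stand-in for your real database, might look like this. The `customers` table, the `Customer` type, and the `CustomerRepository` class are hypothetical; the point is only that the rest of the application calls `add` and `get` and never sees SQL.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class Customer:
    customer_id: int
    name: str


class CustomerRepository:
    """The single place where customer SQL lives."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        # Create the hypothetical table so the sketch is self-contained.
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS customers "
            "(id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def add(self, name: str) -> Customer:
        cur = self._conn.execute(
            "INSERT INTO customers (name) VALUES (?)", (name,)
        )
        self._conn.commit()
        return Customer(cur.lastrowid, name)

    def get(self, customer_id: int) -> Optional[Customer]:
        row = self._conn.execute(
            "SELECT id, name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return Customer(*row) if row else None
```

Once callers depend only on the repository's methods, the same interface can later be served by a separate service or a different datastore without touching the business logic.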

Moving the actual data is another critical part of a successful cloud migration but it is out of scope for this post. There are many good places for your data to land and no shortage of utilities to help you move it.

Tests? Suite!

Write tests! Hopefully as you are refactoring there are some unit tests that protect business logic and help you avoid shooting yourself in the foot. If not, now is the time to write them. It can feel like a huge time sink, but it is worthwhile because it gives you a defensible position that the code behaves as it should. As you build on existing work, you will catch bugs that might otherwise be introduced during the process. Tests protecting against code regression also serve as documentation for other developers who will eventually be working in those areas. The final thing I’ll add about the value of tests is that they contribute to overall code coverage, which many organizations are beginning to require and set minimum thresholds for.
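As a small example of pinning down behavior before you move code around, here is a sketch using the standard library `unittest` module. The `order_total` function and its discount rule are hypothetical stand-ins for whatever business logic your monolith needs protected.

```python
import unittest


def order_total(prices: list[float], discount_pct: float = 0.0) -> float:
    """Hypothetical business rule being protected during the refactor."""
    if not 0 <= discount_pct <= 100:
        raise ValueError("discount_pct must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (1 - discount_pct / 100), 2)


class OrderTotalTests(unittest.TestCase):
    def test_applies_discount(self):
        self.assertEqual(order_total([10.0, 5.0], discount_pct=20), 12.0)

    def test_no_discount_by_default(self):
        self.assertEqual(order_total([10.0, 5.0]), 15.0)

    def test_rejects_invalid_discount(self):
        with self.assertRaises(ValueError):
            order_total([10.0], discount_pct=150)
```

Saved in a test module, these run with `python -m unittest`. If a refactor changes how totals are computed, the failure shows up here rather than in production.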

The team/timing

You will need a team, or at a minimum a few strong coders, who are up to the challenge. They should have a history of being effective and delivering quality work on schedule. Unfortunately, for most organizations these will have to be people with a lot of technical expertise who are able to make important design decisions. While the idea of taking a strong resource like that away from existing work may not sound ideal, having someone with expert domain knowledge and a history of getting things done is a must if you want to succeed. While the stakes are high for these individuals, the satisfaction from taking on such an engineering challenge can be really great. This type of win for a team or organization will foster good faith in technical leadership and build a culture of success.

Some applications can be migrated relatively quickly, while behemoths can take much longer. I have seen it done in a few months and have been a part of projects that took years. Any responsibly led software development effort should have an expected delivery date, but with enough flexibility to address unforeseen issues as they arise. Starting out with a clearly defined strategy and having benchmark goals along the way will help you remain agile during the process. Check out this post by Mike Taylor about the challenges project managers face, and the qualities of those who deliver great results.

Yes we can! 🚀

Refactoring your monolith to make it modular enough to run as containers can be challenging, but it is worthwhile.

If you need help developing your strategy for migrating your monolithic application to the cloud, give us a call at Real Kinetic. We would be happy to discuss how we can help get you there.